USB SuperSpeed Peripherals Forum Discussions

Hello.

I am currently looking at an example for the CYUSB3KIT-003 kit.

The example is called SRAM.

"3.6.2 SRAM Example"

The source code (file "cyfxsram.c") contains an example of reading from memory:

CyU3PDebugPrint(4, "Read\n\r");
apiRetStatus = CyU3PGpifSMSwitch (256, START1, 256, 0, 0);
if (apiRetStatus != CY_U3P_SUCCESS)
{
    CyU3PDebugPrint (CY_FX_DEBUG_PRIORITY, "CyU3PGpifSMSwitch failed, Error code = %d\r\n", apiRetStatus);
}
CyU3PUsbAckSetup ();
isHandled = CyTrue;

However, there is no example of writing to memory.

Is that correct?

How can I write to memory?

Hi all,

I want to configure the board as follows.

FPGA <-> FX3 <-> PC (USB 3.1 Gen 1)

The interface between the FPGA and the FX3 is planned as a Slave FIFO interface streaming data in to the FX3.

The main function is to transfer video data to the PC, but I also want to use UART communication.

When no video data is being transmitted, I want to use UART to send and receive commands between the PC, the FX3, and the FPGA.

Please let me know whether the board can be configured this way.

Good day.

We made a custom video camera based on the CX3 chip, similar to the Denebola dev kit.

Sometimes, during operation, the current consumption of the microcontroller reaches 1 A.

Could you please tell me what could cause this?

Could it be an incorrect setting of the clock frequencies or the clocking scheme (in particular, the PLL)?

Or are there other possible causes?

Thank you very much.

Hi! I am trying to do the following:

After receiving a signal over GPIO, I have to suspend the data flow from GPIF to USB in AUTO DMA mode. However, I can't get access to the buffer to write a signature of that signal. My code is as follows:

// Notification event callback
void DmaNotificationEvent(CyU3PDmaChannel *handle, CyU3PDmaCbType_t type, CyU3PDmaCBInput_t *input)
{
    if (type == CY_U3P_DMA_CB_CONS_SUSP)
    {
        // Expected to run when the consumer socket suspends (currently empty).
    }
}

This is the code where I configure the AUTO DMA channel:

/* Create a DMA AUTO channel for the GPIF to USB transfer. */
CyU3PMemSet ((uint8_t *)&dmaCfg, 0, sizeof (dmaCfg));
dmaCfg.size = CY_FX_DMA_BUF_SIZE;
dmaCfg.count = CY_FX_DMA_BUF_COUNT;
dmaCfg.prodSckId = CY_FX_GPIF_PRODUCER_SOCKET;
dmaCfg.consSckId = CY_FX_EP_CONSUMER_SOCKET;
dmaCfg.dmaMode = CY_U3P_DMA_MODE_BYTE;
dmaCfg.notification = CY_U3P_DMA_CB_CONS_SUSP;
dmaCfg.cb = DmaNotificationEvent;
dmaCfg.prodHeader = 0;
dmaCfg.prodFooter = 0;
dmaCfg.consHeader = 0;
dmaCfg.prodAvailCount = 0;

apiRetStatus = CyU3PDmaChannelCreate (&glDmaChHandle, CY_U3P_DMA_TYPE_AUTO, &dmaCfg);
if (apiRetStatus != CY_U3P_SUCCESS)
{
    CyU3PDebugPrint (4, "CyU3PDmaChannelCreate failed, Error code = %d\n", apiRetStatus);
    CyFxAppErrorHandler(apiRetStatus);
}

/* Set DMA Channel transfer size */
apiRetStatus = CyU3PDmaChannelSetXfer (&glDmaChHandle, CY_FX_GPIFTOUSB_DMA_TX_SIZE);
if (apiRetStatus != CY_U3P_SUCCESS)
{
    CyU3PDebugPrint (4, "CyU3PDmaChannelSetXfer failed, Error code = %d\n", apiRetStatus);
    CyFxAppErrorHandler(apiRetStatus);
}

From my thread routine, I call this function:

apiRetStatus = CyU3PDmaChannelSetSuspend(&glDmaChHandle, CY_U3P_DMA_SCK_SUSP_NONE, CY_U3P_DMA_SCK_SUSP_CONS_PARTIAL_BUF);

Nothing happens: DmaNotificationEvent() is never called with the CY_U3P_DMA_CB_CONS_SUSP event. What is the reason? I could not find any information about this situation. Thank you in advance for your reply!

I have a USB/UART bridge implemented with a MANUAL DMA channel. The producer socket is the UART and the consumer is a USB out EP. I want to send debug messages to this consumer socket so that the terminal on the receiving PC shows them. The UART/USB bridge currently works (I am testing it with a UART loopback). I found in another thread that the CyU3PDmaChannelSetupSendBuffer API can be used to send a different buffer to the consumer socket of a DMA channel.

I've tried this approach, but none of the data sent this way shows up. The UART/USB bridge loopback works fine. I have two terminals open on my PC: one is the USB/UART bridge and the other is connected to the UART RX/TX pins of the FX3. I can type in one terminal and watch the characters appear in the other, in both directions.

Is there something I am missing in the DMA channel parameters (buffer size, count, type) that would affect the CyU3PDmaChannelSetupSendBuffer API call?

Alternatively, can I configure firmware logging with CyU3PDebugInit to use this socket and still use the CyU3PDebugPrint() function?

Hello,

I'm using the CYUSB3014. I need to upload data from an FPGA via the CYUSB3014 to a PC. I use Slave FIFO mode with the Cypress FPGA test design 'fpga_slavefifo2b_verilog_ok' and the Cypress "Streamer" software. With 8 packets per transfer and a packet length of 8192 bytes, Streamer shows a transfer rate of about 120 MB/s. However, when I upload my own data, which arrives at a constant 80 MB/s, I find that after some packets (8 × 8192 bytes) have been uploaded, the FLAGB signal stays at 0 for some time, and as a result some packets are lost. Why does this happen? Does the speed sometimes drop very low? Should I add a large FIFO or DDR memory to buffer my data? Thanks!

I want to use the Cypress USB 3.0 Dev Kit to output a 5 Gbps signal and measure that signal on various analyzers. I stumbled onto the sourcing project and I'm starting to learn how it works. Since I'm in a time crunch, I'm hoping to get some feedback on whether what I want to do is even possible, and any tips on how to get it done.

How can I force the dev kit to repeatedly send out a bit sequence with nothing at the other end (not connected to a PC)? The end of the cable will go into a breakout board (which will provide power), so that I can tap into the data bus signals and measure them on my scope.

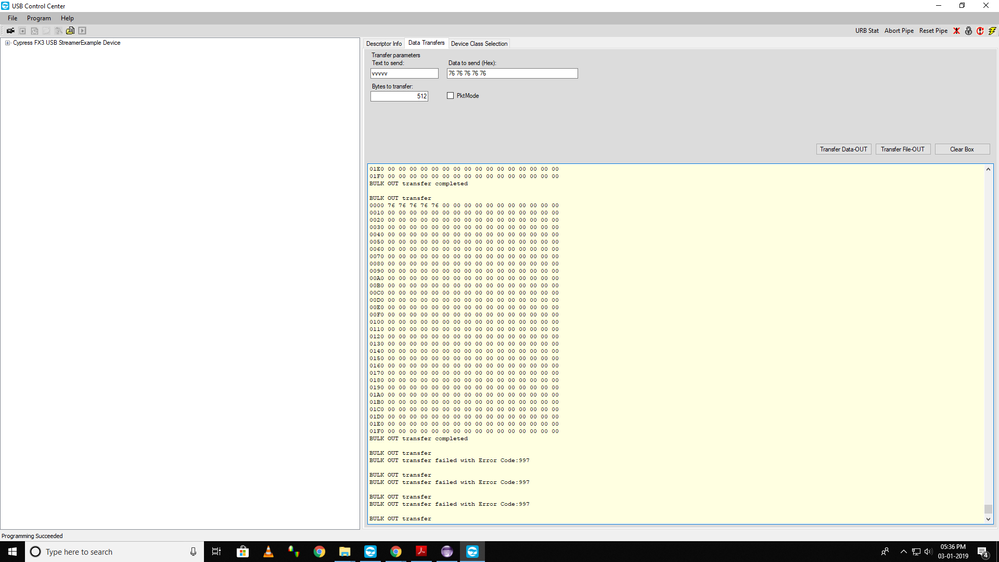

Hello,

I am working on the FX3S, and we are using the SlaveFifoSync example to communicate with a host application. While transferring data from the host PC to the FX3S, after some iterations the transfer fails with error 997. After changing dmaCfg.size and dmaCfg.count, the number of successful iterations increased, but in the end the result was the same error 997. If we need to transmit data from the PC to the FX3S "n" times, what do I have to do? Can anyone help me solve this problem?

Thanks and regards,

Veerendra.

Hello.

1) Is the FX3S in mass production?

2) What revisions are available?

3) Is the USB 3.0 protocol fully supported?

4) What is the fundamental difference between the FX3S RAID-on-Chip USB Dongle and the FX3S DVK kits? Will the schematics be provided after purchase, or where can they be downloaded?

Best regards

I successfully followed AN84868 on Windows. Now I want to implement the same in my own source code on Linux (NEGU93/CYUSB3KIT-003_with_SP605_xilinx).

I am able to program the FX3 device and also send and receive bulk loopback messages, as in AN65974 (but with my own C++ code, using cyfxbulksrcsink.img). I open a .txt file and send it to endpoint 0x01. I then read the same number of bytes and get exactly the same data back on endpoint 0x81.

Next, I try to program the FPGA. I first program the FX3 with ConfigFpgaSlaveFifoSync.img, then I program the FPGA with slaveFIFO2b_fpga_top.bin, and everything seems to work correctly. The FX3 reads the DONE signal and reports via UART that the FPGA was programmed successfully. I also tried another .bin file that just toggles some LEDs on the FPGA board, to check that programming works, and it does. However, when I send the file exactly as before (endpoints 0x01 and 0x81), the send succeeds but I receive no answer.

Moreover, after programming with my code, I tried the cyusb_linux program: I am able to send the file but not to receive it back.

On a Windows machine, I tried both images (the FX3 firmware and the FPGA bitstream), using the "FPGA Configuration Utility" to program both devices and "Control Center" to test the loopback, and it works perfectly.

I don't have many ideas on how to debug this. Any advice?

Thank you very much.
